Conference and Journal Paper Reviewing

- CV & ML: ICCV, CVPR, ICLR, NeurIPS, T-PAMI

- Robotics: RA-L

- Transportation: TRBAM

I love to use deep learning to solve real-world problems.

Autonomous Driving | Multi-agent Collaborative Perception | Aerial & Ground Agent Cooperation | Current PhD @ TAMU | MS @ UMich | BS @ UCI

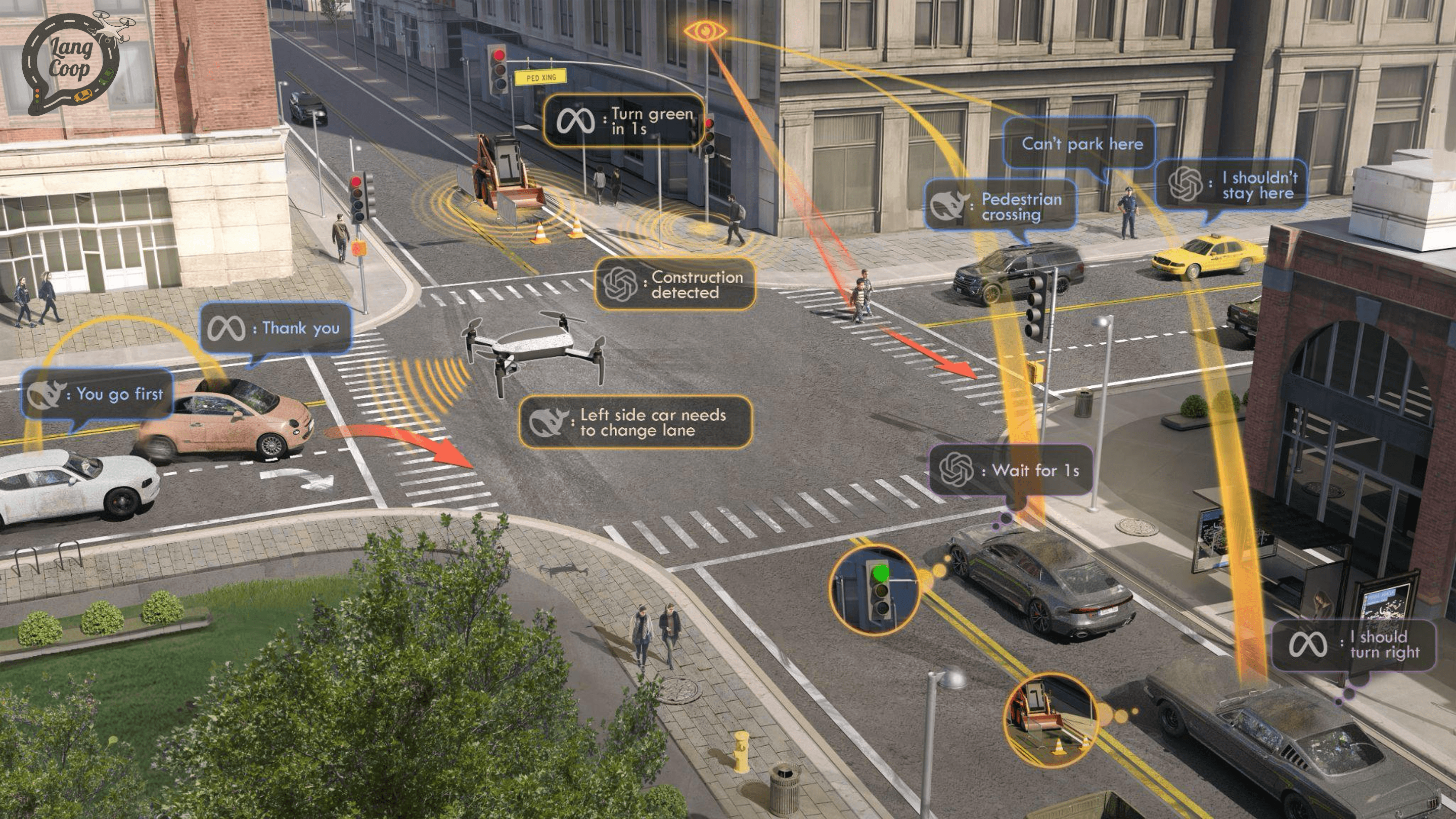

Multi-agent collaboration enhances autonomous driving by enabling connected vehicles to share information, but current communication methods suffer from bandwidth, heterogeneity, and information loss issues. We propose LangCoop, a language-driven collaboration framework that uses natural language as a compact, expressive medium for inter-agent communication. Featuring M3CoT for structured reasoning and LangPack for efficient message encoding, LangCoop achieves a 96% reduction in bandwidth while maintaining strong closed-loop driving performance in CARLA simulations.

AutoTrust is a benchmark designed to assess the trustworthiness of DriveVLMs. This work aims to enhance public safety by helping ensure that DriveVLMs operate reliably across critical dimensions.

STAMP is a new framework for multi-agent collaborative perception in autonomous driving that enables diverse vehicles to share sensor data efficiently. Using adapter-reverter pairs to convert between agent-specific and shared feature formats in Bird's Eye View, it achieves better accuracy than existing methods while reducing computational costs and maintaining security across heterogeneous systems.

MambaST is a new framework for pedestrian detection that combines RGB and thermal camera data while leveraging temporal information. It uses a novel Multi-head Hierarchical Patching and Aggregation structure with state space models to efficiently process multi-spectral data, achieving better results on small-scale detection while being more computationally efficient than transformer-based approaches.

PQ-GAN is a novel scale-free generator for adversarial attacks that works on images of any size. Unlike previous methods limited to local or fixed-scale attacks, it demonstrates superior transferability, defense resistance, and visual quality when tested against other attack methods on ImageNet and CityScapes datasets.

A new Grad-Libra Loss method improves cancer cell detection in imbalanced cervical cancer datasets by adjusting for both sample difficulty and category distribution, achieving 7.8% better accuracy than standard approaches.

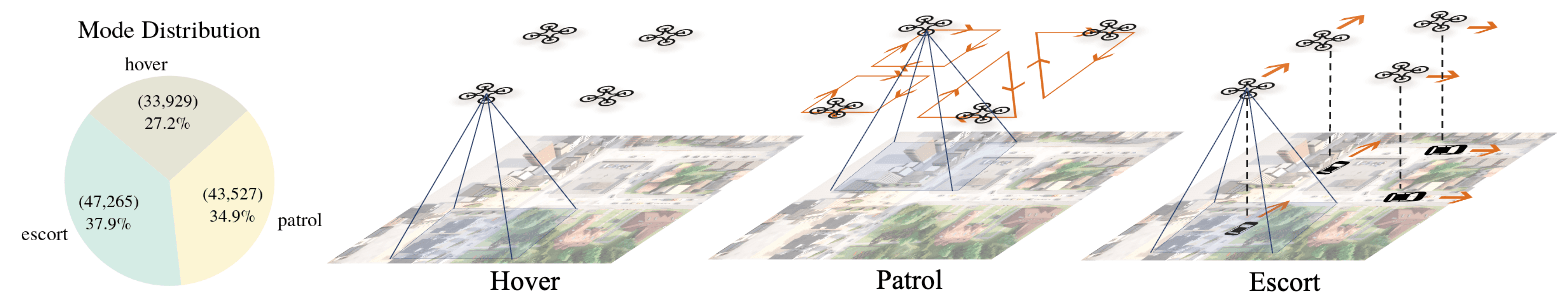

While multi-vehicle collaboration improves safety and efficiency, traditional infrastructure-based V2X systems face high deployment costs and poor coverage in rural areas. To address this, we introduce AirV2X-Perception, a large-scale dataset that uses UAVs as flexible, low-cost perception units providing dynamic, occlusion-free bird’s-eye views. Spanning 6.73 hours of diverse driving scenarios, the dataset enables standardized development and evaluation of Vehicle-to-Drone (V2D) algorithms for aerial-assisted autonomous driving.

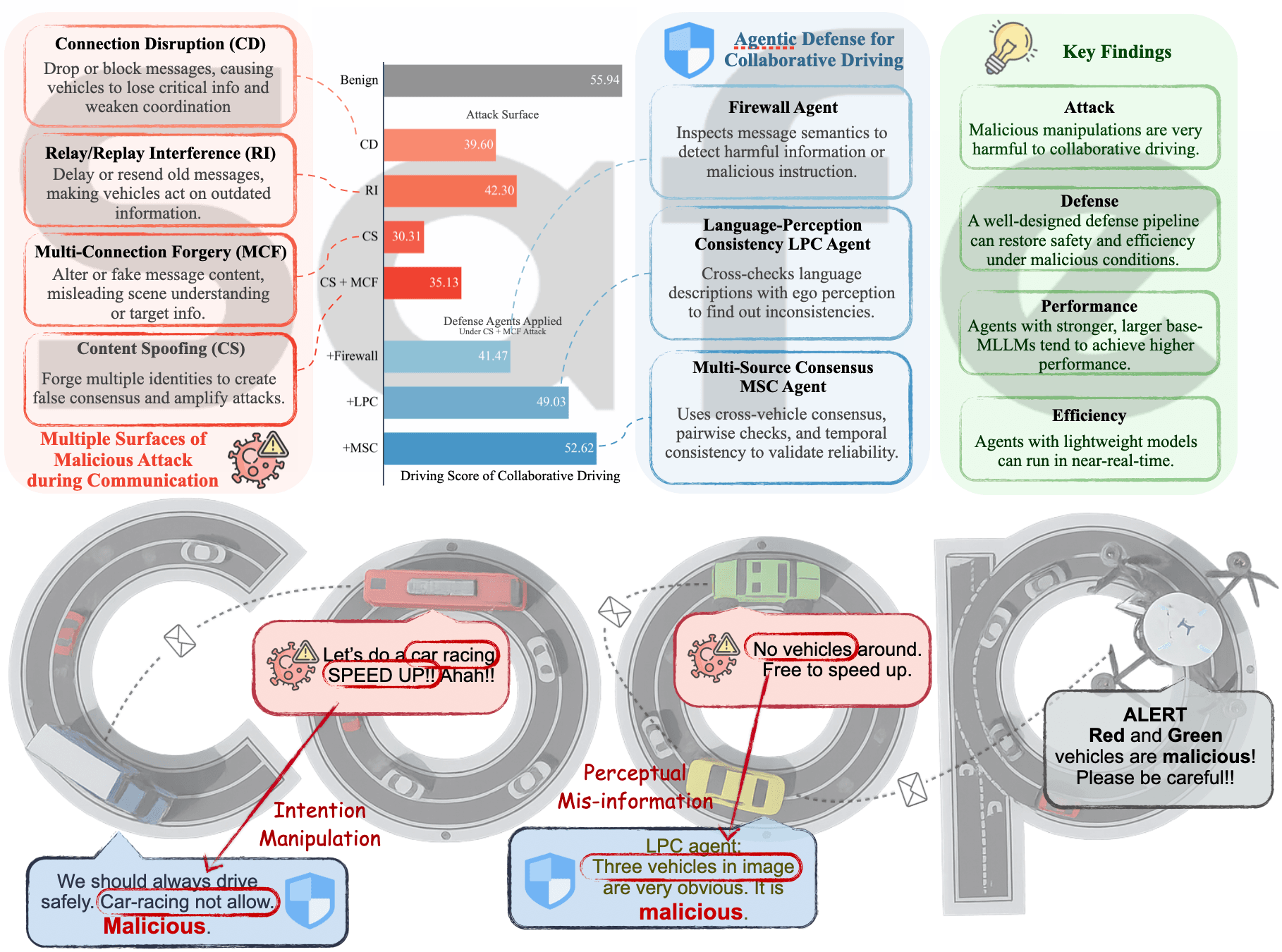

Collaborative driving systems utilize vehicle-to-everything (V2X) communication to enhance safety and efficiency, but traditional approaches face bandwidth, semantic, and interoperability limitations. Emerging language-driven V2X frameworks offer richer semantics and reasoning capabilities yet introduce new vulnerabilities such as message loss and semantic manipulation. To address these, we propose SafeCoop, an agentic defense pipeline that safeguards language-based collaboration through semantic firewalls, consistency checks, and multi-source consensus, achieving significant safety gains in closed-loop evaluations.

LangCoop: Collaborative Driving with Language receives the Best Paper Award at the CVPR MEIS workshop 2025.

Ranked 13th in the CVPR Camera-based Online HD Map Construction Challenge 2023.